A selection of projects:

Ubiquity: Journal of Pervasive Media.

http://www.intellectbooks.co.uk/journals/view-Journal,id=211/

http://www.ubiquityjournal.net/

Ubiquity is an international peer-reviewed journal for creative and transdisciplinary practitioners interested in technologies, practices and behaviours that have the potential to radically transform human perspectives on the world. ‘Ubiquity’, the ability to be everywhere at the same time, a potential historically attributed to the occult, is now a common feature of the average mobile phone. The title refers explicitly to the advent of ubiquitous computing, which has been hastened by the consumption of networked digital devices. The journal anticipates the consequences for design and research in a culture where everyone and everything is connected, and will offer a context for visual artists, designers, scientists and writers to consider how ubiquity is transforming our relationship with the world.

Editors: Mike Phillips, University of Plymouth / Chris Speed, Edinburgh University. Editorial Assistant: Jane Macdonald, Edinburgh University.

…

Qualia

Qualia is a ground-breaking new digital technology and research project, which aims to revolutionise the way audience experiences at arts and culture events are evaluated. The project will help us develop a greater understanding of the less tangible impacts of public engagement with arts and culture.

With funding from the prestigious Digital R&D Fund for the Arts, Qualia is developing new technology to enable the collection of audience profile information, live evaluation and feedback, and real-time measurement of impact indicators at arts and cultural events.

Cheltenham Festivals have hosted the development of the new Qualia app, which is being created by experts at Plymouth and Warwick universities. This project provides an opportunity for one of the foremost festival organisations in the country to join forces with leading digital technology experts at Plymouth and evaluation and impact researchers from Warwick to look at how new technological developments can help us serve our audiences better. The ultimate aim is to create a transferable evaluation tool that allows arts and culture organisations to programme cultural events and ongoing activities based on robust, real-time knowledge about audience responses, thereby enhancing the sector’s economic, cultural and social impact.

The Digital R&D Fund for the Arts is run by Nesta in partnership with Arts Council England and the Arts and Humanities Research Council.

…

Bio-OS

Bio-OS allows intimate biological information to be collected from the user’s body. This is achieved through:

- The use of biological databases that monitor dietary habits through food consumption (calorie intake, etc.) and exercise

- Biological sensors that measure psycho-physical changes within the body (psychogalvanometer, blood pressure, electrocardiogram, respiration, EEG, etc.)

- Behavioural sensing, through audio-visual monitoring (eye-tracking, speech patterns, motion tracking, etc.)

- Temporal behaviour, through reflexive pattern tracking (models of activity over a period of time)

Bio-OS offers subtle and complex combinations of biological (in its broadest sense) sensing technologies to build data models of a body over time. These data models are stored locally as bioids and collected within the user’s personal database, building a biological footprint alongside their individual ecological footprint. The user’s avatar can be used to reflect and distribute the biological model.

…

Arch-OS

Arch-OS represents an evolution in intelligent architecture, interactive art and ubiquitous computing. An ‘Operating System’ for contemporary architecture (Arch-OS, ‘software for buildings’) has been developed to manifest the life of a building and provide artists, engineers and scientists with a unique environment for developing transdisciplinary work and new public art.

The Arch-OS experience combines a rich mix of the physical and virtual by incorporating the technology of ‘smart’ buildings into new dynamic virtual architectures.

…

Eco-OS

Eco-OS explores ecologies. Eco-OS further develops the sensor model embedded in the Arch-OS system through the manufacture and distribution of networked environmental sensor devices. Eco-OS provides a new networked architecture for internal and external environments. Networked and location-aware data gathered from within an environment can be transmitted within the system or to the Eco-OS server for processing.

Eco-OS collects data from an environment through the network of ecoids and provides the public, artists, engineers and scientists with a real time model of the environment. Eco-OS provides a range of networked environmental sensors (ecoids) for rural, urban, work and domestic environments. They extend the concept developed through the Arch-OS and i-500 projects by implementing specific sensors that transmit data to the Operating Systems Core Database.

…

S-OS

S-OS is a collection of creative interventions and strategic actions that provide a new and more meaningful ‘algorithm’ for modelling social exchange, evaluation and impact, proposing a more effective ‘measure’ for ‘Quality of Life’.

The S-OS project provides an Operating System for the social life of a City, community, or cultural event. It superimposes the notion of an ‘OnLine’ Social Operating System onto ‘RealLife’ human interactions, modelling, analysing and making visible social value.

Whilst town planners and architects model the ‘physical’ City and Highways Departments model the ‘temporal’ ebb and flow of traffic in and around the City, S-OS will model the ‘invisible’ social exchanges of the City’s inhabitants.

…

Dome-OS

http://dome-os.org/

i-DAT is developing a range of ‘Operating Systems’ to dynamically manifest ‘data’ as experience in order to enhance perspectives on a complex world. The Operating Systems project explores data as an abstract and invisible material that generates a dynamic mirror image of our biological, ecological and social activities.

At the core of these developments is the Immersive Vision Theatre, a transdisciplinary instrument for the manifestation of material, immaterial and imaginary worlds. The ‘Full Dome’ architecture houses two powerful high-resolution (fish-eye) projectors and a spatialised audio system.

A number of initiatives are being investigated to enable the rendering of real-world data into forms suitable for projection into immersive domed environments. Dome-OS is preoccupied with capturing real-time, real-world data feeds and visualising them in a form suitable for projection in a dome. Even as new game engine technologies open up opportunities for dome environments, the problem presents a series of complex challenges across multiple domains.

…

Exposure

http://www.mike-phillips.net/blog/exposure/

http://artsci.ucla.edu/?q=events/mike-phillips-lecture-exhibition-opening

Lecture + Exhibition Opening: 7 Mar 2012 2:00 pm – 16 Mar 2012 5:00 pm

Opening 7 March: lecture at 2 pm; exhibition opening 5-7 pm. Location: lecture at UCLA Broad Art Center, room 5240; exhibition at CNSI Gallery.

Exposure is an exhibition of work by Mike Phillips, Professor of Interdisciplinary Arts, School of Art & Media at Plymouth University.

The year that Eastman Kodak filed for bankruptcy protection was the same year Fujifilm moved from film production to beauty products. This did not just mark a technological shift from film grain to nanoparticles but also a massive cultural shift – a shift from capturing the face on film to the embedding of ‘film’ in the face.

The thing that once froze the face in an eternal youthful smile is now the anti-aging nanoparticle that preserves the face we wear. Barthes described the face on film as representing “a kind of absolute state of the flesh, which could be neither reached nor renounced”. Now this absolute state is closer to hand and we will walk around wearing our old photo albums as our face, peeling away the frames like layers of dead skin. Our essence, like Garbo’s, will not degrade or deteriorate.

…

‘spectre [ˈspɛktə/]’

http://www.mike-phillips.net/blog/spectre

Exhibition by: Mike Phillips.

‘spectre [ˈspɛktə/]’, Schauraum. Quartier21 (Electric Avenue), MuseumsQuartier, Museumsplatz 1/5, 1070 Wien, Austria. 27.01-18.03.2012.

Spectre explores the potential of data as material for manifesting things that lie outside our normal frames of reference – so far away, so close, so massive, so small and so ad infinitum. The spectre of Schwaiger is made manifest from the atomic forces that bind the Schauraum dust. A space dreams.

Spectre suggests that the Schauraum is such an architecture and that the memories of the building are bonded to its fabric by the atomic forces that have now been unlocked by the Atomic Force Microscope. Spectre builds on the collision of A Mote it is… and Psychometric Architecture by drawing on the experiences of Professor Gustav Adolf Schwaiger, the Technical Director of the Austrian Broadcast Corporation, and his collaboration with the famous medium Rudi Schneider from the late 1930s to the early 1940s. “G.A. Schwaiger… conducted some private (and rather obscure) experiments with the famous medium Rudi Schneider in the studio of a female painter… In fact the flat could have been right above our exhibition space (Schauraum).” (Fiel, W. 2011).

According to Mulacz’s History of Parapsychology in Austria, “Schwaiger in his research focussed on investigating that ‘substance’ and its effects applied then state-of-the-art apparatus, such as remote observation by a TV set.” (Mulacz, P. 2000). That ‘substance’ was the ectoplasm that would emerge from Schneider’s mouth during their experiments. Spectre extends these experiments by broadcasting live feeds from the space of the Schauraum and simultaneously replaying the physical remnants of these happenings as captured in the atomic forces binding the dust from their laboratory.

…

‘A Mote it is…’

http://www.mike-phillips.net/blog/a-mote-it-is/

‘A Mote it is…’ Art in the Age of Nano Technology, John Curtin Gallery, Curtin University of Technology, Perth, WA. 02-04/2010. (catalogue).

“A mote it is to trouble the mind’s eye.”

Words spoken by Horatio to describe Hamlet’s father’s ghost. In this Shakespearian play the ghost is seen but not believed, and one is left to wonder if it is just the seeing of it that makes it real, its existence totally dependent on the desire of the viewer to see it. The ‘mote’ or speck of dust in the eye of the mind of the beholder both creates the illusion and convinces us that what we see is real. Something just out of the corner of our mind’s eye, those little flecks magnified by our desire to see more clearly. Yet the harder we look the more blurred our vision becomes.

A ‘mote’ is both a noun and a verb. Middle English with Indo-European roots, its early Christian origins and Masonic overtones describe the smallest thing possible and empower it with the ability to conjure something into being (so mote it be…). This dual state of becoming and being (even if infinitesimally tiny) renders it a powerful talisman in the context of nano technology.

Throughout the last century we were reintroduced to the idea of an invisible world. The development of sensing technologies allowed us to sense things in the world that we were unaware of (or maybe things we had just forgotten about?). The invisible ‘Hertzian’ landscape was made accessible through instruments that could measure, record and broadcast our fears and desires. Our radios, televisions and mobile phones revealed a parallel world that surrounds us. These instruments endow us with powers that in previous centuries would have been deemed occult or magic.

…

i-500 Project

http://arch-os.com/projects/i-500/

The i-500 is a collaborative project between Paul Thomas, Chris Malcolm and Mike Phillips who were commissioned to produce a sustainable, integrated, interactive art work from rich flows of research and general data generated through interaction in the new Curtin University Resources and Chemistry Precinct. This data will be the source material that is reflected through the architectural fabric and surface pattern of the space.

The i-500 project has established an interactive entity that inhabits the Resources and Chemistry Precinct at Curtin University of Technology. The i-500 is a reciprocal architecture, evolutionary in form and content, responding to the activities and occupants of the new structures.

…

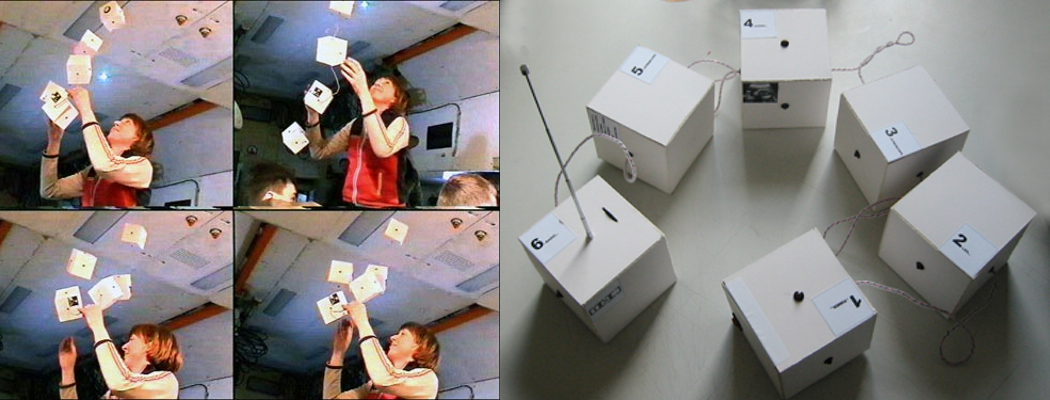

“Constellation Columbia”

http://www.mike-phillips.net/blog/constellation-columbia-modelprototype/

A prototype monument for ‘Dead Astronauts’. ZER0GRAVITY, A Cultural User’s Guide. Ed. Triscott, N. and La Frenais, R. The Arts Catalyst. 2005, 84-85. ISBN 0-9534546-4-9

Phillips, M. “Constellation Columbia”, prototype monument for ‘Dead Astronauts’. Zero-gravity work for parabolic flight from the Gagarin Cosmonaut Training Centre, Russia. Courtesy of The Arts Catalyst: MIR Campaign 2003, Gagarin Cosmonaut Training Centre, Russia. MIR Campaign 2003 supported by the European Commission Culture 2000 Fund.

…

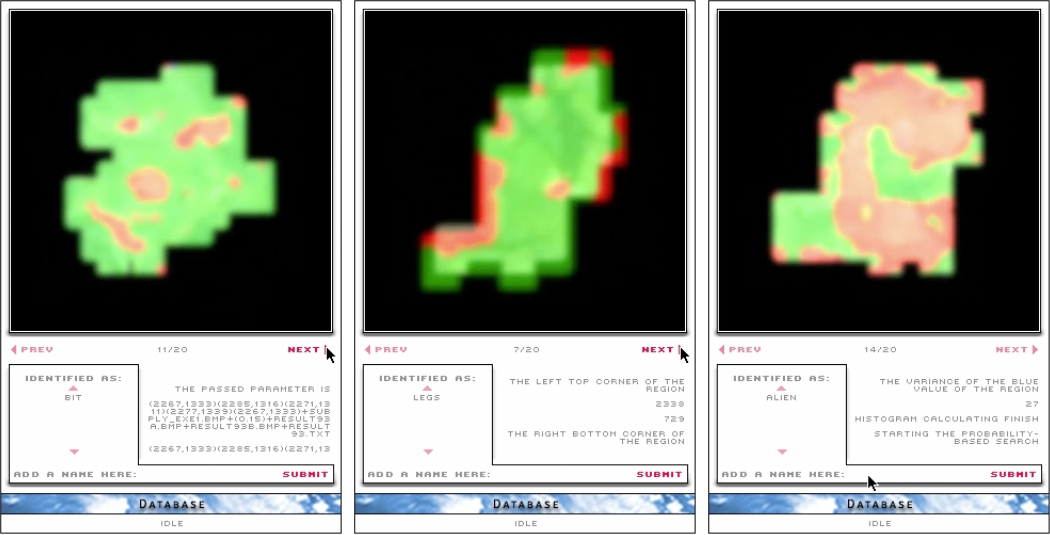

The Search for Terrestrial Intelligence

http://i-dat.org/2000-sti-search-for-terrestrial-intelligence/

S.T.I. turns the technologies that look to deep space for Alien Intelligence back onto Planet Earth in a quest for ‘evidence’ of Terrestrial Intelligence. Using satellite imaging and remote sensing techniques, S.T.I. will scour Planet Earth using processes similar to those employed by SETI (the Search for Extraterrestrial Intelligence). Looking at Earth from space, the project will develop processing techniques using autonomous computer software agents. In their search for evidence of intelligence the agents will generate new images, animations and audio (which may produce more questions than answers).

The S.T.I. Project Consortium brings together artists, scientists and technologists from four research groups (STAR, CNAS, ATR, NRSC) based in three organisations: the University of Plymouth, ATR Media Integration & Communications (Japan), and the National Remote Sensing Centre (NRSC). The S.T.I. Project is led by a Development Committee of eight individuals: Mike Phillips (Project Co-ordinator), Geoff Cox and Chris Speed from STAR @ University of Plymouth; Dr Guido Bugmann and Dr Angelo Cangelosi from the Centre for Neural and Adaptive Systems (CNAS) @ University of Plymouth; Christa Sommerer and Laurent Mignonneau from ATR Media Integration & Communications; and Dr Nick Veck, Technical Director, National Remote Sensing Centre.

Vision dominates our culture and lies at the heart of scientific and artistic endeavour for truth and knowledge. Increasingly the dominance of the human eye is being challenged by a new generation of technologies that do our seeing for us. These technologies raise critical questions about the nature of the truth and knowledge they elicit, and the way in which we interpret them. The S.T.I. Project goes beyond the irony of the search for terrestrial intelligence on Earth by engaging with our understanding of the ‘real world’ through our senses, whether real or artificially enhanced. Will these autonomous systems ‘know’ the ‘truth’ when they ‘see’ it?

The S.T.I. Project engages with critical issues surrounding the shift from the hegemony of the eye to the reliance on autonomous systems to do our seeing for us. This shift has an equal impact on scientific processes and creative endeavour. By turning away from ‘outer space’ to an examination of ‘our space’, the project also engages public interest in the alien within our midst, as expressed in the popular imagination through science fiction (The X-Files, etc.). Do we recognise ourselves when seen through our artificial eyes?

…

‘Artefact’

http://old.i-dat.org/projects/artefact/

‘Artefact’ (http://www.i-dat.org/projects/artefact/) was commissioned through the DIGITAL RESPONSES exhibition at the V&A. Taking the fluidity of the museum artefact as its starting point, the installation could be viewed from two perspectives: the internet, where it was interactive and malleable; and Gallery 70 in the V&A, where it was viewed through a protective display case. At the core of the Artefact Project is a 3D database drawn from the V&A Collection. For the duration of the show the ‘Artefact’ evolves through a generative breeding of this ‘genetic’ information. At some point in its evolution the ‘Artefact’ will become the collection.

The Artefact project was a unique generative and site-specific work in response to the commission. It has been presented in a number of contexts, including: Phillips, M. All that is solid… melts. The liquefaction of form. Altered States: transformations of perception, place, and performance. University of Plymouth. 22-24/07/05. Proceedings published: Liquid Reader 3, Altered States. Ed: Phillips, M. ISBN 1-84102-147-4. 22/07/05.

Phillips, M. Locative Media: Urban Landscape and Pervasive Technology Within Art, Art Gallery Panel. Siggraph 2006 Conference, Boston, 30/07/06.

Phillips, M. ‘Auto-Creativity V1.5: A slash and burn transmedia compression codec for artists and designers’. Teaching in a Digital Domain. Innovation, A National Symposium – Part 3 in collaboration with FEAR, ACUADS, ANCCA. Technology Park, CSIRO Theatre. Curtin University of Technology, Perth, Australia. Part of the BEAP [2002 Biennale of Electronic Arts Perth]. (11/07/02)

…

‘Autoicon’

http://www.iniva.org/autoicon/DR/

AUTOICON is a dynamic internet work that simulates both the physical presence and elements of the creative personality of the artist Donald Rodney who – after initiating the project – died from sickle-cell anaemia in March 1998. The project builds on Donald Rodney’s artistic practice in his later years, when he increasingly began to delegate key roles in the organisation and production of his artwork. Making reference to this working process, AUTOICON is developed by a close group of friends and artists (ironically described as ‘Donald Rodney plc’) who have acted as an advisory and editorial board in the artist’s absence, and who specified the rules by which the ‘automated’ aspects of the project operate.

AUTOICON is automated by programmed rule-sets and works to continually maintain creative output. Visitors to the site will encounter a ‘live’ presence through a ‘body’ of data (which refers to the mass of medical data produced on the human body), be able to engage in simulated dialogue (derived from interviews and memories), and in turn affect an ‘auto-generative’ montage-machine that assembles images collected from the web (rather like a sketchbook of ideas in flux). Through AUTOICON, participants generate new work in the spirit of Donald’s art practice and offer a challenge to, and critique of, the idea of monolithic creativity. In this way, the project draws attention to current ideas around human-machine assemblages, dis-embodied exchange and deferred authorship, and raises timely questions over digital creativity, ethics and memorial.

Donald Rodney made considerable use of imagery such as x-ray photography, blood samples, cell culture, and so on, to draw attention not only to the medical condition that was slowly corroding his body but, more importantly, as a metaphor to represent the ‘disease’ of racism that lay at the core of society. More information on the work of Donald Rodney can be found on the website http://www.iniva.org/autoicon/DR/

AUTOICON has been produced by STAR, Science Technology Arts Research, University of Plymouth (Geoff Cox, Angelika Koechert, Mike Phillips); inIVA, Institute of International Visual Arts (Gary Stewart); and Sidestream (Adrian Ward); with contributions from Eddie Chambers, Richard Hylton, Virginia Nimarkoh, Keith Piper, Diane Symons; and with support from ACE, Arts Council of England (New Media Fund). (Thanks also to Pete Everett and Elliot Lewis).

…

Mediaspace

http://www.mike-phillips.net/blog/mediaspace/

The intent of ‘MEDIASPACE’, whether in its ‘dead’ paper-based form or the ‘live’ digital forms of satellite and internet, is to explore the implications of new media forms and emergent fields of digital practice in art and design. ‘MEDIASPACE’ is an experimental publishing project that explores the integration of print, WWW and interactive satellite transmissions (incorporating live studio broadcasts, ISDN-based video conferencing, and asynchronous email/ISDN tutorials). The print form of ‘MEDIASPACE’ is published as part of the CADE (Computers in Art and Design Education) journal Digital Creativity by SWETS & ZEITLINGER. The interactive satellite transmissions were funded by the European Space Agency (ESA), the British National Space Centre (BNSC), and WIRE (Why ISDN Resources in Education), and use the Olympus, EUTELSAT and INTELSAT satellites via a TDS-4b satellite uplink.

The convergence of these technologies generates a distributed digital ‘space’ (satellite footprint, studio space, screen space, WWW space, location/reception space, and the printed page). There is also a novel and dynamic set of relationships established between the presenters (studio based), the participant/audience (located across Europe), and the reader. As an electronic publishing experiment in real-time (‘live’ media) delivery, combined with a backbone of pre-packaged information (‘dead’ media content), the ‘MEDIASPACE’ transmissions provide a provocative model for the convergence of ‘publishing’, ‘networked’, and ‘broadcast’ forms and technologies.